The growing understanding of addiction as a disease rather than a choice has opened the door to better treatment and research, but some aspects of addiction make it uniquely difficult to treat.

One unusual characteristic of addiction is that it persists even in the absence of drug use: during periods of abstinence, symptoms get worse over time, and sensitivity to the drug increases.

Researcher Elizabeth Heller, PhD, of the University of Pennsylvania Epigenetics Institute, wants to understand why symptoms persist even after drug use, the initial cause of the addiction, has stopped. Heller, who spoke at a Jan. 18 biochemistry seminar, believes the answer lies in epigenetic regulation.

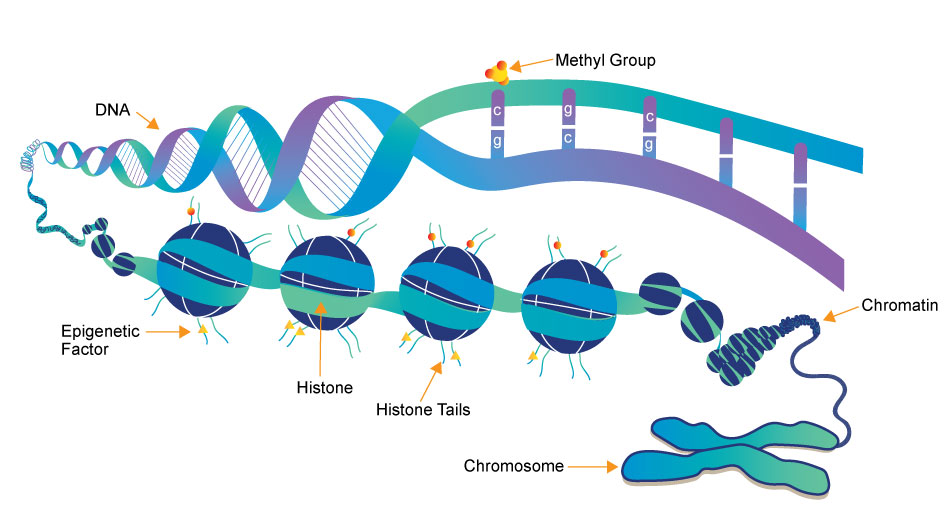

Epigenetic regulation represents the nurture part of “nature vs. nurture.” Our bodies have mechanisms that control how and when cells express certain genes without changing the underlying DNA sequence. These mechanisms respond to changes in our environment, and this process of influencing gene expression without altering the basic genetic code is called epigenetics.

Heller believes that the persistence of addiction symptoms during abstinence can be explained by epigenetic changes caused by the drugs themselves.

To investigate the role of epigenetics in addiction, specifically cocaine addiction, Heller and her team have developed a series of tools, engineered transcription factors, that bind to specific stretches of DNA and alter the epigenetic regulation, and therefore the expression, of the genes located there. They identified the FosB gene, which had previously been implicated as a regulator of drug addiction, as a site for these changes.

Increased expression of the FosB gene has been shown to increase sensitivity to cocaine, meaning that individuals with higher FosB expression respond more strongly to the drug. Heller found that cocaine users show decreased levels of the protein responsible for inhibiting FosB expression. This suggests that cocaine use itself depletes a protein that would otherwise help keep FosB in check and dampen the response to cocaine, which may make the drug more addictive.

Another gene, Nr4a1, is important in dopamine signaling, the reward pathway that is “hijacked” by drugs of abuse. This gene has been shown to attenuate the reward response to cocaine in mice: mice in which Nr4a1 was epigenetically suppressed showed an increased reward response to cocaine. A compound being explored as a cancer treatment has been shown to activate Nr4a1, and Heller has shown that it can reduce cocaine reward behavior in mice.

The identification of genes like FosB and Nr4a1, together with evidence that changes in gene expression are even greater during abstinence than during drug use, may represent exciting leaps in our understanding of addiction and, ultimately, in finding treatments best suited to such a unique and devastating disease.

Post by undergraduate blogger Sarah Haurin