Dr. Alex Huth. Image courtesy of The Gallant Lab.

On October 15, I attended a presentation on “Using Stories to Understand How The Brain Represents Words,” sponsored by the Franklin Humanities Institute and Neurohumanities Research Group and presented by Dr. Alex Huth. Dr. Huth is a neuroscience postdoc who works in the Gallant Lab at UC Berkeley and was here on behalf of Dr. Jack Gallant.

Dr. Huth opened the lecture by noting that semantic tasks activate huge swaths of the cortex and that the semantic system places importance on stories. The open question was “how the brain represents words.”

To investigate this, the Gallant Lab designed a natural language experiment. Subjects lay in an fMRI scanner and listened to 72 hours’ worth of ten naturally spoken narratives, or stories. They heard many different words and concepts. Using an imaging technique called GE-EPI fMRI, the researchers were able to record BOLD responses from the whole brain.

Dr. Huth explaining the process of obtaining the new colored models that revealed semantic “maps are consistent across subjects.”

Dr. Huth showed a scan and said, “So looking…at this volume of 3D space, which is what you get from an fMRI scan…is actually not that useful to understanding how things are related across the surface of the cortex.” This limitation led the researchers to improve their methods by reconstructing the cortical surface and flattening it into a 2D image that reveals what is going on throughout the brain. With this view, they could see where in the brain the responses tracked what the subject was hearing.

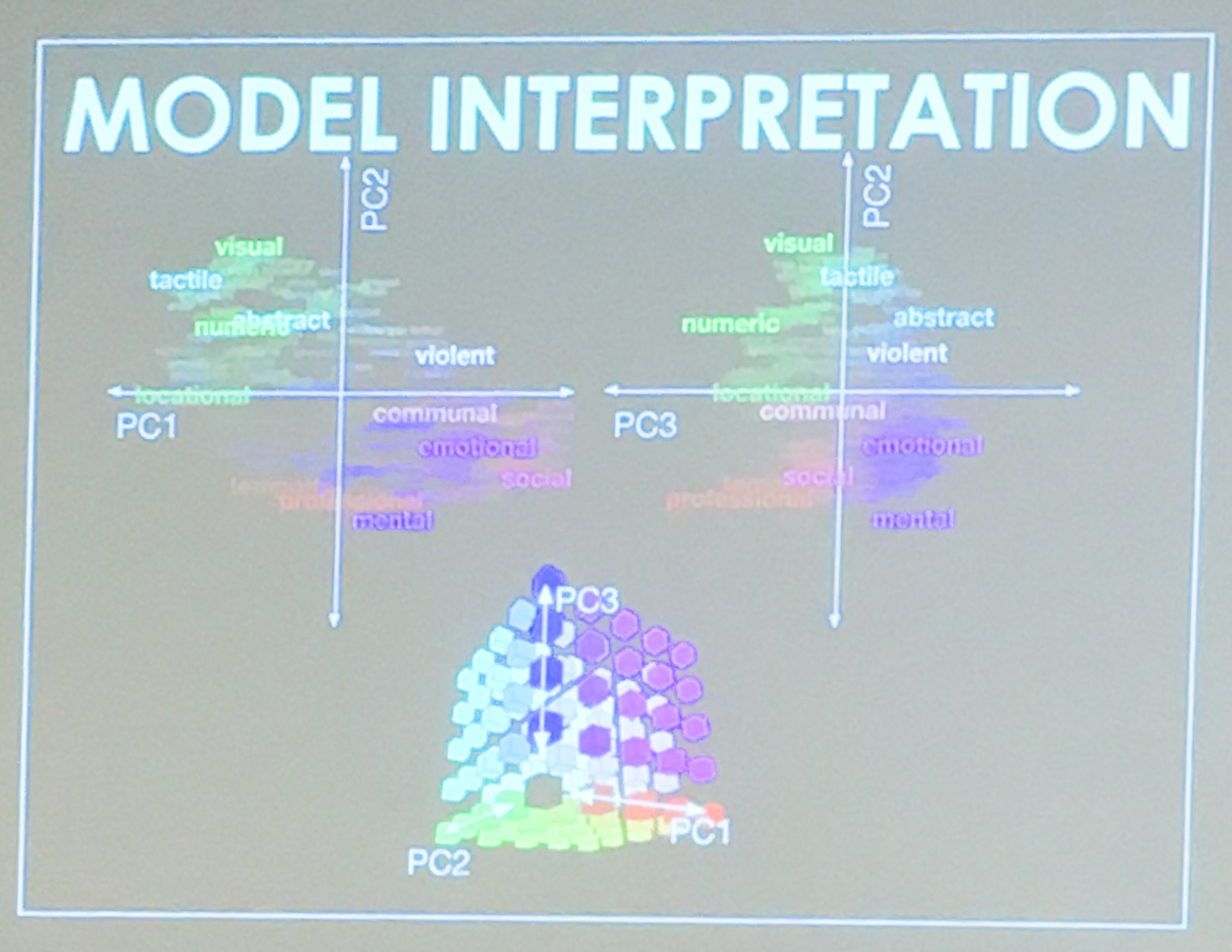

A model was then created that required voxel interpretation, which “is hard and lots of work,” said Dr. Huth. “There’s a lot of subjectivity that goes into this.” To make voxel interpretation easier, the researchers used principal components analysis to reduce the model to a lower-dimensional subspace and find classes of voxels. In other words, they took the data, found the important factors that were shared across subjects, and interpreted the meaning of each component. To visualize these components, the researchers sorted words into twelve different categories.
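As a rough illustration of the principal components step, here is a minimal sketch in plain Python. It is not the lab's pipeline: the data are synthetic, the three "semantic features" are hypothetical, and power iteration is used only because it finds the first component without any external library. The idea is that if voxels' model weights mostly vary along one shared direction, PCA recovers that direction.

```python
import math
import random

def center(rows):
    """Subtract each column's mean so every feature has zero mean."""
    n, dims = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(dims)]
    return [[r[j] - means[j] for j in range(dims)] for r in rows]

def covariance(rows):
    """Sample covariance matrix (features x features) of centered rows."""
    n, dims = len(rows), len(rows[0])
    return [[sum(r[i] * r[j] for r in rows) / (n - 1) for j in range(dims)]
            for i in range(dims)]

def first_component(cov, iters=100):
    """Power iteration: converges to the eigenvector with the largest eigenvalue."""
    dims = len(cov)
    v = [1.0 / math.sqrt(dims)] * dims
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(dims)) for i in range(dims)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v

# Synthetic stand-in for voxel weights: 200 hypothetical voxels, 3 hypothetical
# semantic features. Features 0 and 1 share a common factor t; feature 2 is noise.
random.seed(0)
rows = []
for _ in range(200):
    t = random.gauss(0, 3)
    rows.append([t + random.gauss(0, 0.1),
                 t + random.gauss(0, 0.1),
                 random.gauss(0, 0.1)])

centered = center(rows)
cov = covariance(centered)
pc = first_component(cov)

# Project every voxel onto the first component to get one score per voxel.
scores = [sum(x * p for x, p in zip(r, pc)) for r in centered]

# Fraction of total variance captured by the first component.
along_pc = sum(pc[i] * cov[i][j] * pc[j] for i in range(3) for j in range(3))
explained = along_pc / sum(cov[i][i] for i in range(3))
print("first component:", [round(p, 3) for p in pc])
print("variance explained:", round(explained, 3))
```

In this toy setup the first component loads about equally on features 0 and 1 and barely on feature 2. Interpreting such a component is the step Dr. Huth described as subjective: someone still has to decide what the direction means.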

The Four Categories of Words Sorted in an X,Y-like Axis

These categories were then further simplified into four “areas” on what might resemble an x, y axis. On the top right was where violent words were located. The top left held social perceptual words. The lower left held words relating to “social.” The lower right held emotional words. Instead of x, y axis labels, there were PC labels. The words from the study were then colored based on where they appeared in the PC space.

By using this model, the Gallant Lab could identify which patches of the brain were doing different things. Small patches of color showed which “things” the brain was “doing” or “relating.” The researchers found that the complex cortical maps of semantic information were consistent across subjects.

These responses were then used to create models that could predict BOLD responses from the semantic content in stories. The result of the study was that the parietal cortex, temporal cortex, and prefrontal cortex represent the semantics of narratives.
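To make the idea of predicting BOLD responses from semantic content concrete, here is a small sketch for a single simulated voxel. Everything in it is an assumption for illustration: the talk summary does not say which regression method was used, so ridge regression stands in as one common choice, the three semantic features and their weights are invented, and the hemodynamic delay of real fMRI data is ignored.

```python
import math
import random

def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x

def ridge_fit(features, response, alpha):
    """Weights minimizing squared error plus alpha * (sum of squared weights)."""
    d = len(features[0])
    xtx = [[sum(f[i] * f[j] for f in features) + (alpha if i == j else 0.0)
            for j in range(d)] for i in range(d)]
    xty = [sum(f[i] * y for f, y in zip(features, response)) for i in range(d)]
    return solve(xtx, xty)

def predict(features, weights):
    """Predicted response at each time point: dot product of features and weights."""
    return [sum(w * x for w, x in zip(weights, f)) for f in features]

def correlation(a, b):
    """Pearson correlation between two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)

# Simulated voxel: its response is a weighted sum of three hypothetical
# semantic features of the story at each time point, plus measurement noise.
random.seed(1)
true_weights = [2.0, -1.0, 0.5]
features = [[random.gauss(0, 1) for _ in range(3)] for _ in range(200)]
response = [sum(w * x for w, x in zip(true_weights, f)) + random.gauss(0, 0.1)
            for f in features]

# Fit on the first 150 time points, then test on the held-out 50.
weights = ridge_fit(features[:150], response[:150], alpha=1.0)
score = correlation(predict(features[150:], weights), response[150:])
print("estimated weights:", [round(w, 2) for w in weights])
print("held-out correlation:", round(score, 3))
```

Testing on held-out time points is the key design choice here: a model counts as capturing what a voxel represents only if it predicts responses to story segments it was not fit on.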

Post by Meg Shieh
