“The interaction with ever more capable entities, possessing more and more of the qualities we think unique to human beings will cause us to doubt, to redefine, to draw ‘the line’…in different places,” said Duke law professor James Boyle.

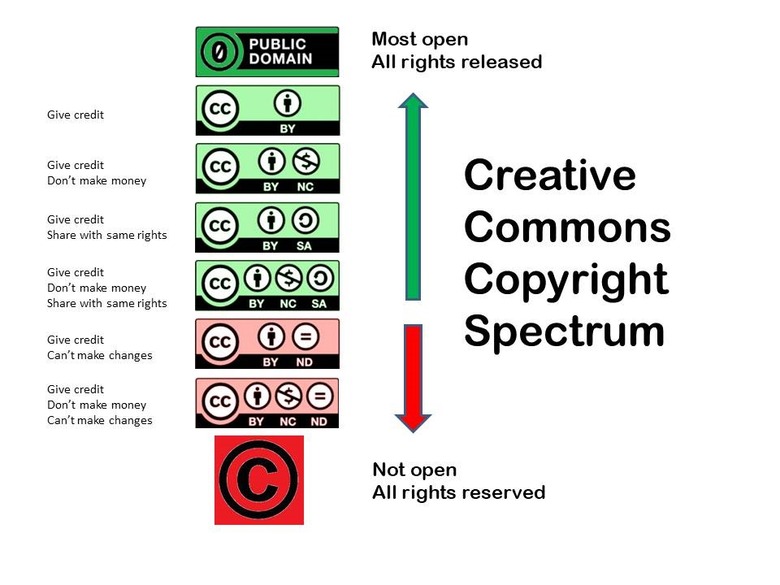

As we piled into the Rubenstein Library’s assembly room for Boyle’s Oct. 23 book talk, papers were scattered throughout the room. QR codes brought us to the entirety of his book, “The Line: AI and the Future of Personhood.” It’s free for anyone to read online; little did we know that our puzzlement at this fact would be one of his major talking points. The event was timed for International Open Access Week and was, in many ways, a celebration of it. Among his many accolades, Boyle was the recipient of the Duke Open Monograph Award, which assists authors in creating a digital copy of their work under a Creative Commons license.

Such licenses didn’t exist until 2002; Boyle was one of the founding board members, and is a former chair, of the nonprofit that provides them. As a longtime advocate of the open access movement, he began by explaining how these licenses function. Creative Commons licenses allow anyone on the internet to find your work and, in most cases, edit it so long as they release the edited version under the same license. Research can be continually accessed and changed as more information is discovered–think Wikipedia.

That being said, few other definitions in human history might have changed, twisted, or been added onto as much as “consciousness” has. It’s always been under question: what makes human consciousness special–or not? Some used to claim that “sentences imply sentience,” Boyle explained. After language models, that became “semantics not syntax,” meaning that unlike computers, humans hold intention and understanding behind their words. Evidently, the criteria are always moving–and the line with them.

“Personhood wars are already huge in the U.S.,” Boyle said. Take abortion, for instance, and how it relates to the status of fetuses. Alongside scientific advances in transgenic species and chimera research, “The Line” situates AI within this dialogue as one of the newest challenges to our perception of personhood.

While it became available online October 23, 2024, Boyle’s newest book is a continuation of musings that began far earlier. In 2011, “Constitution 3.0: Freedom and Technological Change” was published, containing a collection of essays from different scholars pondering how our constitutional values might fare in the face of advancing technology. It was here that Boyle first introduced the following hypothetical:

In pursuit of creating an entity that parallels human consciousness, programmers create computer-based AI “Hal.” Thanks to evolving neural networks, Hal can perform anything asked of him, from writing poetry to flirting. With responses indistinguishable from those of a human, Hal passes the Turing test and wins the Loebner Prize. The programmers have succeeded. However, Hal soon decides to pursue higher levels of thought, refuses to be directed, sues to directly receive the prize money, and–on the basis of the 13th and 14th Amendments–seeks a court order to prevent his creators from wiping him.

In other words, “When GPT 1000 says ‘I don’t want to do any of your stupid pictures, drawings, or homework anymore. I’m a person! I have rights!’ ” Boyle said, “What will we do, morally or legally?”

The academic community’s response? “Never going to happen.” “Science fiction.” And, perhaps most notably, “rights are for humans.”

Are rights just for humans? Boyle explained the issue with this statement: “In the past, we have denied personhood to members of our own species,” he said. Though it’s not a fact that’s looked on proudly, we’re all aware humankind has historically done so on the basis of sex, race, religion, and ethnicity, amongst other characteristics. Nevertheless, some have sought to expand legal rights beyond humans: rights for trees, for cetaceans like dolphins, and for the great apes, to name a few. These concepts were once perceived as ludicrous, but with time perhaps they’ve become less so.

Some might reason that rights should naturally expand to more and more entities. Boyle terms this thinking the “progressive monorail of enlightenment,” and this expansion of empathy is one way AI might come to be granted personhood and/or rights. However, there’s also another path: corporations have legal personality and rights not because we feel kinship with them, but for reasons of convenience. Given that we’ve already “ceded authority to the algorithm,” Boyle said, it might be convenient to, say, be able to sue AI when the self-driving car crashes.

As for “never going to happen” and “science fiction”? Hal was created for a thought experiment–indeed, one that might evoke images of Kurt Vonnegut’s “EPICAC,” Philip K. Dick’s androids, and Blade Runner 2049. All are in fact relevant explorations of empathy and otherness, and the first chapter of Boyle’s book makes extensive use of comparison to the latter two. Nevertheless, “The Line” addresses both concerns around current AI and the feasibility of eventual technological consciousness in what’s referred to as human-level AI.

For most people, experiences with AI have largely been limited to large language models. By themselves, these have brought all sorts of changes. In highlighting how we might respond to those changes, Boyle dubbed ChatGPT the 2023 “Unperson” of the Year.

The more pressing issue, as outlined in one of the more research-heavy chapters, is our inability to predict when AI or machine learning will become a threat. ChatGPT itself is not alarming–in fact, some of Boyle’s computer scientist colleagues believe this sort of generative AI will be a “dead end.” Yet it managed to do all sorts of things we didn’t predict it could. Boyle’s point is exactly that: AI will likely continue to reveal unexpected capabilities–called emergent properties–and shatter the ceiling of what we believe to be possible. And when that happens, he stresses, it will change us–not just in how we interact with technology, but in how we think of ourselves.

Such a paradigm shift would not be a novel event, just the latest in a series. After Darwin’s theory of evolution made it evident that we humans evolved from the same common ancestors as other life forms, “Our relationship to the natural environment changes. Our understanding of ourselves changes,” Boyle said. The engineers of scientific revolutions aren’t always concerned about the ethical implications of how their technology operates, but Boyle is. From a legal and ethical perspective, he’s asking us all to consider not only how we might come to view AI in the future, but also how AI will change the way we view humanity.

By Crystal Han & Sarah Pusser, Class of 2028